(2012-14) Simulated Task Environment for Collaborative Systems Evaluation. Principal Investigator. National Project, grant by FCT (PTDC/EIA-EIA/117058/2010). Funding received: 70.225€. Participants: FCUL.

The evaluation of collaborative systems is difficult. This has been noted by two recent longitudinal studies, which report that between one third [Pinelle, 2000] and one half [Antunes, 2010] of the collaborative systems developed are never evaluated. Furthermore, [Antunes, 2010] report that, of the systems that were evaluated, only 10% were assessed through laboratory experiments, while other techniques, such as case studies and empirical studies, are more favoured by the research community.

A possible explanation for this situation could lie in different research orientations: the CSCW (Computer-Supported Cooperative Work) community may favor interpretivism, which is more focused on questions of meaning, over positivism, which is more concerned with questions of cause and effect. However, our longitudinal study, which analyzed 246 papers published between 2000 and 2008, actually found a slight preference for positivist evaluations: 25.2% positivist versus 16.3% interpretivist.

Another explanation, which we adopt as our research hypothesis, is that laboratory experiments are difficult to conduct in the CSCW context, which is characterised by complex dependencies between humans, technology, tasks and organizational goals. Laboratory experiments focus on precise events, whereas collaborative systems involve phenomena such as awareness, learning, negotiation and decision-making, which unfold at various levels of detail, from the individual to the group and the organization. This hypothesis is grounded in an extensive survey of current CSCW evaluation techniques, to appear in ACM Computing Surveys [Antunes, to appear].

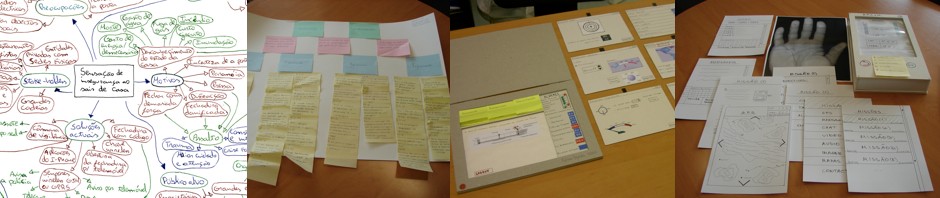

Our standpoint is that the existing CSCW research framework lacks the methods and tools necessary to simplify laboratory experimentation. Consequently, the project has two main goals: 1) develop and validate a conceptual framework for conducting laboratory experiments with collaborative systems; and 2) implement and validate a computational tool supporting these experiments.

The evaluation framework will be developed around five fundamental requirements:

1. Control the contextual conditions affecting collaboration. This requirement is at the core of any laboratory approach.
2. Mediate interaction, communication and collaboration. The evaluation concerns human-computer interaction, human-human communication, and individual and collaborative actions.
3. Specify the roles, tasks and actions that users may perform, and conduct the experiment according to that specification. During an experiment it is often necessary to suspend the task to query the participants about the task, the collaboration, their situation awareness and other dependent variables. The specification must therefore also state when tasks should be suspended and which questions should be put to the users.
4. Simulate group members. Any evaluation of collaborative systems requires a large number of users, and users rapidly become a scarce resource. The evaluation tool may address this problem by simulating some of the group members using predefined user protocols.
5. Obtain experimental data and preserve it in context for detailed analysis. The experimental data concerns interaction, communication and collaboration, and may have to go down to the keystroke level when complex cognitive phenomena such as attention and awareness must be analyzed.
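Requirements 3 to 5 can be made more concrete with a small experiment specification. The following Python sketch is purely hypothetical: the classes `Probe`, `SimulatedMember`, `ExperimentSpec` and `Event`, the function `run`, the use of discrete time steps, and the incident-management roles and messages are all assumptions made for illustration, not the project's actual tool. It shows one possible way to declare when the task is suspended and which questions are asked, to let scripted protocols stand in for missing group members, and to keep every message and answer in a single time-stamped log.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical sketch: none of these names come from the project's tool.

@dataclass
class Probe:
    """Suspension point: at step `at` the task is frozen and `questions` are asked."""
    at: int
    questions: List[str]

@dataclass
class SimulatedMember:
    """A group member played by a predefined protocol (time step -> scripted message)."""
    role: str
    protocol: Dict[int, str]

@dataclass
class ExperimentSpec:
    roles: List[str]                  # all roles in the group, human or simulated
    duration: int                     # number of discrete time steps (an assumption)
    probes: List[Probe]
    simulated: List[SimulatedMember]

@dataclass
class Event:
    t: int          # time step, so every record keeps its temporal context
    source: str     # role that produced the event ("experimenter" for probes)
    kind: str       # "message", "probe" or "answer" in this sketch
    payload: str

def run(spec: ExperimentSpec,
        human_input: Callable[[int, str], Optional[str]],
        answer: Callable[[str, str], str]) -> List[Event]:
    """Step through the specification and return a time-stamped event log."""
    log: List[Event] = []
    probes = {p.at: p for p in spec.probes}
    simulated_roles = {m.role for m in spec.simulated}
    humans = [r for r in spec.roles if r not in simulated_roles]
    for t in range(spec.duration):
        # Suspend the task first: each probe question goes to every human participant.
        if t in probes:
            for q in probes[t].questions:
                log.append(Event(t, "experimenter", "probe", q))
                for r in humans:
                    log.append(Event(t, r, "answer", answer(r, q)))
        # Simulated members act only as their protocol dictates.
        for m in spec.simulated:
            msg = m.protocol.get(t)
            if msg is not None:
                log.append(Event(t, m.role, "message", msg))
        # Human participants act through the mediating tool.
        for r in humans:
            msg = human_input(t, r)
            if msg is not None:
                log.append(Event(t, r, "message", msg))
    return log

# Usage with invented incident-management roles and messages.
spec = ExperimentSpec(
    roles=["commander", "field_officer", "dispatcher"],
    duration=5,
    probes=[Probe(at=3, questions=["Where is the incident?"])],
    simulated=[SimulatedMember("dispatcher", {0: "Fire reported in sector B",
                                              2: "Second unit en route"})],
)
log = run(spec,
          human_input=lambda t, r: "ack" if (r == "commander" and t == 1) else None,
          answer=lambda r, q: f"{r}: sector B")
for e in log:
    print(e.t, e.source, e.kind, e.payload)
```

Logging probes and answers in the same stream as the collaboration messages is one way to preserve data in context: each answer can later be related to what the group had exchanged up to the moment the task was suspended.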

The project will evaluate the framework and tool through multiple laboratory experiments. One such experiment has already been conducted in the area of incident management. It provided important insights into the quality of the experimental data collected by the tool, which includes the exchanged voice messages, user-interface events and answers to questionnaires. Further experiments will serve to consolidate the data collection instruments and measures, and to validate the research hypothesis.